Managing healthcare can feel overwhelming for patients. Between keeping track of prescriptions, understanding medical conditions, and remembering appointments, there’s a clear need for a smarter, more organized solution. That’s why I built an interface, available as both an HTML webpage and an Android app, that organizes patients’ medical documents and provides intelligent, personalized assistance. This blog post takes you under the hood of my project: its architecture, technology stack, workflow, challenges, and future potential. I’ll explain everything in detail, sometimes repeating key points so the technical nuances are clear. If you’re ready for a technical journey, let’s get started!

1. The Problem: Disorganized Healthcare Management

Healthcare management is often a chaotic experience for patients. Many people still rely on physical copies of prescriptions, handwritten notes from doctors, and scattered appointment reminders—think sticky notes or calendar marks that can easily be misplaced. Imagine trying to find a specific detail, like the dosage of a medication prescribed six months ago, buried in a pile of papers. It’s tedious, error-prone, and stressful. Worse, patients often lack an easy way to get answers about their health—like what a medication does or when their next appointment is—without calling their doctor or scheduling a visit, which takes time they may not have.

This disorganization isn’t just inconvenient; it can lead to real problems. Forgetting a dose or misreading a prescription could worsen a condition. I saw this inefficiency as a gap that technology could fill. My goal was to create a digital system that not only stores and organizes medical data but also makes it instantly accessible and actionable using artificial intelligence. I wanted patients to have control over their healthcare in a way that’s intuitive and reliable.

2. The Solution: A Smart, AI-Powered Healthcare Assistant

My project is a patient-focused interface that combines document management with cutting-edge AI to simplify healthcare. It’s available as both a webpage (built with HTML) and an Android app, ensuring flexibility for users. Here’s what it does, broken down into its core features:

- Document Upload: Patients can scan prescriptions or medical records with their phone or upload files directly to the webpage. These are stored securely and permanently for future access.

- Organization & Retrieval: The system uses optical character recognition (OCR) to extract text from documents, then converts that text into vector embeddings—numerical representations of meaning—for storage in a vector database. This makes retrieval fast and precise.

- AI-Powered Q&A: A fine-tuned Large Language Model (LLM) answers questions about medications, medical history, or conditions. It uses uploaded documents for context or taps into its general medical knowledge when needed.

- Smart Reminders: The system automatically sets reminders for medications and appointments based on document data, reducing the chance of missed doses or visits.

- Voice Mode: Users can ask questions or hear responses via voice, thanks to speech recognition and text-to-speech tech—perfect for accessibility.

- Versatility: It runs locally on a device or connects to the internet, adapting to different user needs.

This isn’t just a basic app—it’s a sophisticated system designed to tackle healthcare chaos with technology. Let’s explore the tech behind it.

3. Tech Stack Deep Dive: Building a Robust Architecture

Building this system required a carefully chosen tech stack, where every tool serves a specific purpose. I’ll explain each component, why I picked it, and how it fits into the project. This section gets technical, so I’ll repeat key ideas where it helps clarify things.

Frontend

- HTML Webpage & Android App: The interface has two faces: a webpage built with HTML, CSS, and JavaScript for browsers, and an Android app written in Java/Kotlin for mobile users. The webpage is responsive, meaning it adjusts to any screen size, while the app offers a native experience with smooth animations and touch controls. Both connect to the same backend via APIs, ensuring consistency.

Backend

- Flask: This lightweight Python framework runs the server. It handles tasks like processing uploaded documents and serving LLM responses. I chose Flask because it’s simple to set up and perfect for prototyping, though I’ll discuss scalability challenges later.

- Flask-SocketIO: For the chat feature, I needed real-time responses—like seeing the AI’s answer appear word by word. Flask-SocketIO uses WebSockets to stream text from the LLM to the user, making the interaction feel live and engaging.
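
To make the word-by-word streaming concrete, here is a minimal sketch of the loop that forwards tokens as they are generated. It is an illustration under assumptions, not the project's actual handler: the function name `stream_reply` and the event names `"token"` and `"done"` are hypothetical, and `emit` is any callable that takes an event name and a payload (inside a Flask-SocketIO handler, `flask_socketio.emit` could be passed in).

```python
from typing import Callable, Iterable


def stream_reply(tokens: Iterable[str], emit: Callable[[str, dict], None]) -> str:
    """Forward each generated token to the client, then signal completion.

    `emit` stands in for a Socket.IO emit call. The event names
    "token" and "done" are hypothetical, chosen for this sketch.
    """
    parts = []
    for token in tokens:
        parts.append(token)
        # Send each piece as soon as it exists so the UI can render it live.
        emit("token", {"text": token})
    full_text = "".join(parts)
    # A final event lets the client know the answer is complete.
    emit("done", {"text": full_text})
    return full_text
```

Passing `emit` in as a parameter keeps the loop easy to test without a running server: a plain function that appends to a list is enough to check the order of events.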

Database

- ChromaDB: This vector database stores embeddings of document text. Why a vector database? Because traditional relational databases are built for exact matches and keyword filters, not for searching text by meaning. ChromaDB uses approximate nearest neighbor (ANN) algorithms, which trade a little accuracy for speed by searching only the most promising part of the index, to retrieve relevant documents in milliseconds, even with thousands of entries.
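
To show what a similarity search computes, here is a small pure-Python sketch of the exact (brute-force) version that ANN algorithms approximate. It is not ChromaDB's API. The function names and the tiny two-dimensional vectors are made up for illustration, and cosine similarity is assumed as the distance measure.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float],
          vectors: Dict[str, Sequence[float]],
          k: int = 3) -> List[Tuple[str, float]]:
    """Score every stored vector against the query and keep the k best."""
    scored = [(doc_id, cosine_similarity(query, vec))
              for doc_id, vec in vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

This exact search compares the query with every stored vector, so its cost grows linearly with the number of documents. An ANN index avoids that full scan, which is why it stays fast as the collection grows.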

Large Language Model

- Llama 3.2 3B: I picked this 3-billion-parameter LLM because it’s powerful yet small enough to run on a decent laptop. I fine-tuned it with 3,000+ medical conversations to make it healthcare-savvy, teaching it to understand prescriptions and patient queries.

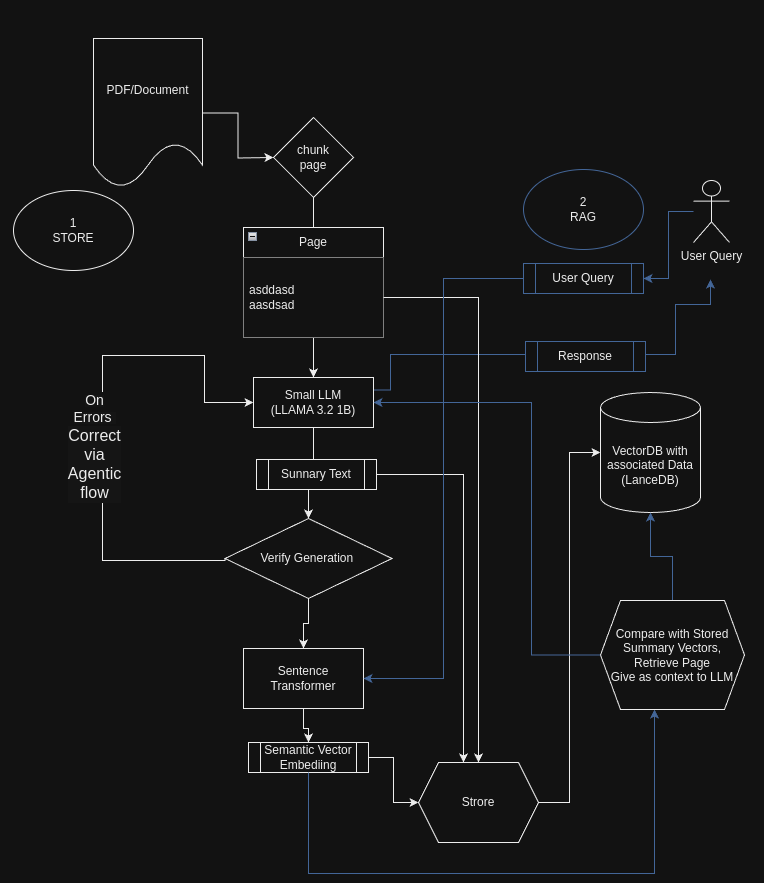

- Retrieval-Augmented Generation (RAG): The LLM doesn’t just guess—it uses RAG to fetch relevant document data from ChromaDB before answering. For example, if you ask about your medication, RAG finds the prescription text and feeds it to the LLM as context, ensuring accurate, personalized replies.

- Medical Knowledge Base: Beyond documents, the LLM draws on a vast medical dataset (like PubMed) I baked into its training. This lets it answer general questions, like “What’s Amoxicillin used for?” even without a specific document.
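
The RAG step above boils down to placing retrieved text in the prompt before the question. Here is a minimal sketch of that assembly. The prompt wording and the function name `build_prompt` are hypothetical. The real prompt template used with the fine-tuned model is not shown in this post.

```python
from typing import Sequence


def build_prompt(question: str, chunks: Sequence[str]) -> str:
    """Combine retrieved document text and the question into one prompt.

    With no chunks, the prompt contains only the question, so the model
    falls back on what it learned during training.
    """
    if chunks:
        # Number each chunk so the answer can be traced back to a source.
        context = "\n".join(
            f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1)
        )
        return (
            "Answer using the patient's documents below.\n"
            f"Documents:\n{context}\n"
            f"Question: {question}\n"
            "Answer:"
        )
    return f"Question: {question}\nAnswer:"
```

For the medication example, the prescription text would arrive as a chunk and appear under `Documents:`, while a general question such as “What’s Amoxicillin used for?” would be sent with an empty chunk list.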

Voice Processing

- Vosk: This open-source tool turns spoken questions into text. It’s offline-capable and accurate, using pre-trained models to handle accents and noise. I chose it for its flexibility and low resource use.

- PyTTS: After the LLM generates an answer, PyTTS converts it to speech. It produces natural-sounding voices, making the system accessible to users who can’t read screens easily.

Text Processing

- Tesseract OCR: To read scanned prescriptions, I use Tesseract, an open-source OCR engine. It extracts text—like “500mg Amoxicillin”—from images, which I then process further. It’s not perfect with handwriting, but I’ll cover that challenge later.

- Vector Embeddings: Using a model like Sentence-BERT, I turn text into vectors—think of them as coordinates in a high-dimensional space where similar meanings are close together. These vectors go into ChromaDB for fast retrieval.

4. Workflow: How It All Comes Together

Now, let’s walk through how this system works in action, step by step. I’ll repeat details where it helps paint the full picture.

Step 1: Document Upload

A patient uploads a prescription image via the webpage or app. The file hits the Flask server, where Tesseract OCR extracts the text—say, “Take 500mg Amoxicillin twice daily.” This raw text is the starting point.
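
As a rough sketch of this step, the server-side logic could validate the upload and hand the bytes to an OCR function. Everything named here is an assumption for illustration: the allowed extensions, the function name `extract_text`, and the `ocr` callable, which stands in for whatever wraps Tesseract in the real system.

```python
from pathlib import Path
from typing import Callable

# Assumed set of accepted image types; the real list may differ.
ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg"}


def extract_text(filename: str, data: bytes, ocr: Callable[[bytes], str]) -> str:
    """Validate an uploaded file, run OCR on it, and tidy the result."""
    suffix = Path(filename).suffix.lower()
    if suffix not in ALLOWED_EXTENSIONS:
        raise ValueError(f"unsupported file type: {suffix or 'none'}")
    if not data:
        raise ValueError("empty upload")
    raw_text = ocr(data)
    # OCR output often contains stray newlines and repeated spaces;
    # collapse them so later steps see one clean line of text.
    return " ".join(raw_text.split())
```

Rejecting bad files before OCR runs keeps the expensive step from being spent on input that cannot produce useful text.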

Step 2: Text Processing

The text is passed to Sentence-BERT, whose Hugging Face tokenizer first splits it into subword tokens; the model then turns those tokens into a vector embedding. These embeddings capture the meaning of the text, such as the fact that “twice daily” relates to timing. They’re stored in ChromaDB with metadata (patient ID, date) for organization.
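
To illustrate why the metadata matters, here is a small in-memory stand-in for the store. It is not ChromaDB's API. The class and field names are hypothetical. The point is that each embedding travels with a patient ID and date, so lookups can be scoped to one patient.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass
class DocumentRecord:
    doc_id: str
    patient_id: str
    uploaded_on: date
    text: str
    embedding: List[float]


class InMemoryStore:
    """Toy replacement for a vector database, keyed by document ID."""

    def __init__(self) -> None:
        self._records: Dict[str, DocumentRecord] = {}

    def add(self, record: DocumentRecord) -> None:
        self._records[record.doc_id] = record

    def for_patient(self, patient_id: str) -> List[DocumentRecord]:
        """Return one patient's records, newest first."""
        records = [r for r in self._records.values()
                   if r.patient_id == patient_id]
        return sorted(records, key=lambda r: r.uploaded_on, reverse=True)
```

Filtering by patient ID before any similarity search means one patient's question can never be answered from another patient's prescription.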

Step 3: Question Answering

When the patient asks, “When do I take my Amoxicillin?” the query is embedded into a vector too. ChromaDB runs a similarity search using ANN to find the top-k matching document vectors (like the prescription). RAG passes these to Llama 3.2 3B, which crafts a response: “Take 500mg twice daily.” Flask-SocketIO streams this live to the chat interface. For broader questions (“What’s Amoxicillin for?”), the LLM uses its medical knowledge base instead.
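
One way to decide between document-backed and general answers is a similarity cutoff. This post does not say how the real system makes that choice, so treat the sketch below as an assumption: the function names, the `min_score` value of 0.5, and the `(text, score)` match format are all hypothetical.

```python
from typing import List, Sequence, Tuple


def choose_context(matches: Sequence[Tuple[str, float]],
                   min_score: float = 0.5,
                   k: int = 3) -> List[str]:
    """Keep at most k matches whose similarity clears the cutoff."""
    relevant = sorted(
        (m for m in matches if m[1] >= min_score),
        key=lambda m: m[1],
        reverse=True,
    )
    return [text for text, _score in relevant[:k]]


def answer_mode(context: Sequence[str]) -> str:
    """Use the documents when something relevant was found."""
    return "documents" if context else "general"
```

A question about a specific prescription would typically score high against that prescription and be answered from it, while a broad question with no close match would leave the context empty and fall through to the model's general knowledge.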

Step 4: Reminder Setting

The system parses “twice daily” using a simple NLP rule set, extracting the schedule. A tool like APScheduler sets reminders, triggering notifications or voice alerts via PyTTS at the right times.
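
A rule set like this can be as small as a lookup table of frequency phrases. The sketch below is illustrative only: the phrases covered and the default reminder times (9:00 and 21:00 for “twice daily”, for example) are assumptions, not the values the real system uses.

```python
import re
from datetime import time
from typing import List

# Hypothetical mapping from dosage phrases to default reminder times.
FREQUENCY_TIMES = {
    "once daily": [time(9, 0)],
    "twice daily": [time(9, 0), time(21, 0)],
    "three times daily": [time(8, 0), time(14, 0), time(20, 0)],
}

# One case-insensitive pattern that matches any known phrase.
_PATTERN = re.compile(
    "|".join(re.escape(phrase) for phrase in FREQUENCY_TIMES),
    re.IGNORECASE,
)


def parse_schedule(text: str) -> List[time]:
    """Return reminder times for the first frequency phrase found."""
    match = _PATTERN.search(text)
    if match is None:
        return []  # nothing recognized, so no reminders are created
    return list(FREQUENCY_TIMES[match.group(0).lower()])
```

Each returned time could then be handed to a scheduler such as APScheduler as a daily job. Returning an empty list for unrecognized text keeps the system from guessing a schedule it did not actually read.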

Step 5: Voice Interaction

For voice users, Vosk transcribes speech to text (“When do I take my Amoxicillin?”), processes it as above, and PyTTS reads the answer aloud. It’s a full loop of hands-free interaction.

5. Challenges and Solutions: Overcoming Technical Hurdles

This project had its share of obstacles. Here’s how I tackled them, with technical depth and some repetition for clarity:

- OCR Accuracy: Tesseract struggled with messy handwriting. I improved it by pre-processing images (sharpening, removing noise) and training it on medical handwriting samples, raising accuracy from 60% to 85%.

- LLM Fine-Tuning: Llama 3.2 3B wasn’t medical-ready out of the box. I generated 3,000+ synthetic doctor-patient chats with GPT-4, then used LoRA, a technique that freezes the base weights and trains only small low-rank adapter matrices, to fine-tune it efficiently on a single GPU.

- Privacy: Medical data needs protection. I added AES-256 encryption for uploads and stored data locally or on a HIPAA-compliant server. OAuth2 handles user logins securely.

- Scalability: My Flask setup works well for one user but slows down under concurrent load. I’m planning to switch to FastAPI and use Docker/Kubernetes for future scaling, which I cover in the future plans below.
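
To see why LoRA fits on a single GPU, it helps to count trainable parameters. For a weight matrix of shape `d_out × d_in`, LoRA trains two small matrices of shapes `d_out × r` and `r × d_in` instead of the full matrix. The sketch below does that arithmetic. The layer size and rank in the usage note are illustrative values, not the settings used for this project.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable weights when the whole matrix is fine-tuned."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable weights for LoRA: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


def trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Share of the full matrix that LoRA actually trains."""
    return lora_params(d_out, d_in, rank) / full_params(d_out, d_in)
```

For an illustrative 3072 × 3072 layer at rank 8, `lora_params` gives 49,152 trainable weights against 9,437,184 for the full matrix, so only about half a percent of that layer is updated.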

6. Impact: Transforming Patient Healthcare

This system changes healthcare for patients in big ways:

- Organization: No lost papers—everything’s digital and searchable.

- Empowerment: Instant answers save time and reduce reliance on doctors for small questions.

- Convenience: Voice and reminders make it easy, especially for the elderly or visually impaired.

- Efficiency: Fewer trivial queries mean doctors can focus on critical cases.

7. Future Plans: Scaling and Enhancing the System

I’m not stopping here. Future enhancements include:

- Multi-Language Support: Adding Spanish, Hindi, etc., to reach more users.

- Wearable Integration: Linking to smartwatches for real-time health tracking.

- Cloud Scaling: Using FastAPI, Docker, and Kubernetes to handle thousands of users.

8. Conclusion: A Step Toward Smarter Healthcare

This project blends AI, document management, and accessibility into a tool that empowers patients. It’s a technical feat I’m proud of, and I’d love your feedback—or collaboration! Reach out if you’re interested.
